Posted On

The Neural Network Explained: From Brain-Inspired Models to Modern Deep Learning

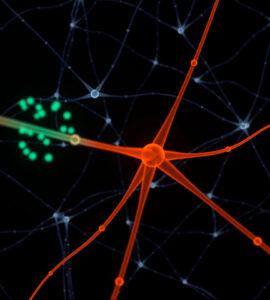

Imagine standing in front of a vast web of lights—each one flickering in response to a signal from another. Now imagine that these lights represent neurons, and the patterns they create form the foundation of how machines learn, adapt, and make decisions. This intricate web is the essence of a neural network—a model inspired by the human brain but built from the language of mathematics and code.

Modern deep learning owes its success to the evolution of these networks. Yet, to truly understand how they work, one must go beyond definitions and dive into the story of how they mimic the brain’s brilliance while shaping the future of AI.

From Neurons to Networks: A Digital Reflection of the Brain

The concept of neural networks began with a simple metaphor: what if we could replicate how the human brain processes information? Each neuron in our brain receives signals, processes them, and passes them on. Similarly, artificial neurons (or nodes) receive numerical inputs, multiply each one by a learned weight, add a bias, apply an activation function to the result, and pass the output to the next layer.
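A single artificial neuron fits in a few lines. This is a minimal sketch that assumes the common formulation of a weighted sum plus a bias passed through a sigmoid activation; the article does not name an activation, so sigmoid is our choice, and the weights and bias are arbitrary illustrative values rather than learned ones.

```python
import math


def sigmoid(z: float) -> float:
    """Logistic activation: squashes any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron(inputs: list[float], weights: list[float], bias: float) -> float:
    """One artificial neuron: weighted sum of the inputs plus a bias, then an activation."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(z)


# Illustrative values only; in a trained network the weights and bias are learned.
print(neuron([1.0, 0.5], [0.4, -0.2], bias=0.1))
```

Here the weighted sum is 0.4 - 0.1 + 0.1 = 0.4, and the sigmoid turns it into a value slightly above 0.5.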

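Early models such as Frank Rosenblatt's perceptron, introduced in the late 1950s, laid the groundwork, but they were limited by their simplicity: a single-layer perceptron can only separate classes with a straight line (a linear decision boundary), so it cannot learn even a simple function like XOR. The idea truly flourished with the advent of deep learning, in which networks gained depth through multiple layers that learn increasingly abstract representations. These layers enable machines to distinguish cats from dogs, understand speech, and even generate art.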
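The perceptron's limitation is easy to reproduce. The sketch below assumes the classic perceptron learning rule with a step activation and a learning rate of 1 (both are our choices for a small, exact example). It learns AND, which is linearly separable, but cannot reach full accuracy on XOR, because no single straight line splits XOR's positive and negative cases.

```python
def step(z: float) -> int:
    """Threshold activation used by the classic perceptron."""
    return 1 if z >= 0 else 0


def train_perceptron(samples, labels, lr: float = 1.0, epochs: int = 20):
    """Perceptron learning rule on 2-D binary inputs, starting from zero weights."""
    w = [0.0, 0.0]
    b = 0.0
    for _ in range(epochs):
        for (x1, x2), y in zip(samples, labels):
            pred = step(w[0] * x1 + w[1] * x2 + b)
            err = y - pred  # 0 when correct, +1 or -1 when wrong
            w[0] += lr * err * x1
            w[1] += lr * err * x2
            b += lr * err
    return w, b


def accuracy(w, b, samples, labels) -> float:
    """Fraction of samples the trained perceptron classifies correctly."""
    hits = sum(step(w[0] * x1 + w[1] * x2 + b) == y
               for (x1, x2), y in zip(samples, labels))
    return hits / len(labels)


X = [(0, 0), (0, 1), (1, 0), (1, 1)]
AND = [0, 0, 0, 1]  # linearly separable: the perceptron can learn it
XOR = [0, 1, 1, 0]  # not linearly separable: no single line splits the classes

for name, y in [("AND", AND), ("XOR", XOR)]:
    w, b = train_perceptron(X, y)
    print(name, accuracy(w, b, X, y))
```

However long you train, the XOR accuracy stays below 1.0; adding a hidden layer is what removes this ceiling.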

For learners aiming to understand how such architectures are implemented in practice, an AI course in Chennai offers structured exposure to building, training, and optimising these systems from the ground up.

The Hidden Layers: Where Learning Truly Happens

In a neural network, the “hidden layers” are where the real magic unfolds. Much like how the brain transforms sensory input into perception, these layers turn raw data into meaningful representations.

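Each layer builds on the one before it: early layers pick up simple, low-level features such as edges and colour contrasts, and deeper layers combine them into more complex, abstract ones such as textures, object parts, and whole objects. In image recognition, for example, the first layer might detect lines, intermediate layers might respond to shapes such as eyes, and later layers might recognise entire faces.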

This hierarchical process is what makes neural networks so powerful. They learn features automatically, greatly reducing the need for the manual feature engineering that once defined traditional machine learning.
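To make the idea of a hidden layer concrete, here is a tiny two-layer network whose weights we set by hand instead of learning them. Its two hidden units compute intermediate features (OR and NAND of the inputs), and the output unit combines them into XOR, a function a single-layer perceptron cannot represent. This is an illustrative toy under those assumptions, not how image networks are built; in practice the weights are learned from data.

```python
def step(z: float) -> int:
    """Threshold activation: 1 if z >= 0, else 0."""
    return 1 if z >= 0 else 0


def layer(inputs, weights, biases):
    """Dense layer: each row of `weights` feeds one unit, followed by a step activation."""
    return [step(sum(x * w for x, w in zip(inputs, row)) + b)
            for row, b in zip(weights, biases)]


# Hypothetical hand-picked weights (not learned), chosen for illustration.
HIDDEN_W = [[1.0, 1.0],    # unit 1 fires if x1 OR x2 is on
            [-1.0, -1.0]]  # unit 2 fires unless both are on (NAND)
HIDDEN_B = [-0.5, 1.5]
OUTPUT_W = [[1.0, 1.0]]    # output fires only if both hidden units fire (AND)
OUTPUT_B = [-1.5]


def xor_net(x1: int, x2: int) -> int:
    """XOR = OR(x1, x2) AND NAND(x1, x2), computed through one hidden layer."""
    hidden = layer([x1, x2], HIDDEN_W, HIDDEN_B)  # intermediate features
    return layer(hidden, OUTPUT_W, OUTPUT_B)[0]


for x in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(x, xor_net(*x))
```

The hidden units play the role of learned features: neither OR nor NAND alone solves the task, but the output layer can combine them linearly.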

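Deep networks, however, come with challenges: high computational cost, vanishing gradients (error signals that shrink as they are propagated backwards through many layers), and the risk of overfitting. Over time, researchers developed remedies such as improved optimisers, activation functions like ReLU that keep gradients from shrinking, and regularisation methods such as dropout, making training more efficient and robust.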
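A little arithmetic shows why vanishing gradients hit deep sigmoid networks. During backpropagation the chain rule multiplies one activation derivative per layer; the sigmoid's derivative is at most 0.25, while ReLU's is exactly 1 for positive inputs. The sketch below deliberately simplifies by ignoring the weight matrices and assuming every layer sees the same pre-activation, so it isolates the effect of the activation function alone.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def sigmoid_grad(z: float) -> float:
    """Derivative of the sigmoid; its maximum value is 0.25, reached at z = 0."""
    s = sigmoid(z)
    return s * (1.0 - s)


def relu_grad(z: float) -> float:
    """Derivative of ReLU (taken as 0 at z = 0 by convention)."""
    return 1.0 if z > 0 else 0.0


def chained_grad(grad_fn, depth: int, z: float) -> float:
    """Product of per-layer activation derivatives, as the chain rule multiplies them.

    Simplification (assumption): weights are ignored and every layer shares the same z.
    """
    g = 1.0
    for _ in range(depth):
        g *= grad_fn(z)
    return g


for depth in (1, 5, 10, 20):
    # Best case for the sigmoid (z = 0) versus ReLU on a positive input.
    print(depth, chained_grad(sigmoid_grad, depth, 0.0), chained_grad(relu_grad, depth, 1.0))
```

Even in the sigmoid's best case the signal falls to 0.25 ** 20, below one trillionth, after twenty layers, while the ReLU product stays at 1.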

The Rise of Deep Learning: Fuelled by Data and Compute Power

Just as a seed requires both sunlight and soil to grow, neural networks need two things to flourish—large amounts of data and powerful hardware. The 2010s provided both.

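With GPUs accelerating the matrix computations at the heart of neural networks, and massive datasets available through the internet, models grew rapidly in size and capability. Landmark models such as AlexNet and ResNet in image recognition, and the GPT family in language processing, reshaped their fields, and their successors now support applications ranging from healthcare diagnostics to autonomous driving and natural language processing.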

The reason deep learning feels almost magical today is not mystery but scale. Networks with billions of parameters, each one a learned connection weight or bias, are trained to recognise patterns that humans might overlook.
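Where do counts like "billions of parameters" come from? In a fully connected layer every input is wired to every output unit, so the number of weights grows with the product of the layer widths, plus one bias per output unit. The sketch below counts parameters for a plain multilayer perceptron; the layer sizes are hypothetical, and architectures such as transformers or convolutional networks tally their parameters differently in detail.

```python
def dense_params(n_in: int, n_out: int) -> int:
    """One fully connected layer: a weight per connection plus a bias per output unit."""
    return n_in * n_out + n_out


def mlp_params(layer_sizes: list[int]) -> int:
    """Total parameters of a multilayer perceptron, given its layer widths (input first)."""
    return sum(dense_params(a, b) for a, b in zip(layer_sizes, layer_sizes[1:]))


# Hypothetical architecture: 784 inputs (a 28x28 image), two hidden layers of 512, 10 outputs.
print(mlp_params([784, 512, 512, 10]))  # 669706
```

Because each layer's cost is roughly width times width, doubling the hidden widths roughly quadruples the weights between them, which is how parameter counts climb so quickly.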

As industries race to harness this power, understanding the mathematics and architecture behind these networks has become essential. Training through an AI course in Chennai helps professionals bridge theory with implementation—transforming abstract concepts into practical skill sets.

Ethical and Interpretability Challenges

Despite their power, neural networks are often described as “black boxes.” They make accurate predictions but don’t always explain how. This opacity raises ethical questions in critical domains such as healthcare, finance, and law.

How can we trust a model’s decision if we can’t interpret it? Researchers are now working on explainable AI (XAI), which aims to open this black box by revealing how decisions are made. Techniques such as saliency maps and layer-wise relevance propagation, for instance, highlight which parts of an input most influenced the output.
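The simplest saliency map is the magnitude of the gradient of the model's output with respect to each input feature: features whose small changes move the output most are deemed most influential. Real tools compute this by backpropagating through a trained network; to stay dependency-free, the sketch below estimates the same quantity with central finite differences on a hypothetical stand-in model. It illustrates gradient saliency only, not layer-wise relevance propagation, which uses a different propagation rule.

```python
import math


def model(x: list[float]) -> float:
    """Hypothetical stand-in 'black box': one sigmoid neuron with fixed weights."""
    weights = [2.0, -0.5, 0.0]
    z = sum(xi * wi for xi, wi in zip(x, weights))
    return 1.0 / (1.0 + math.exp(-z))


def saliency(f, x: list[float], eps: float = 1e-5) -> list[float]:
    """Gradient saliency: |df/dx_i| for each input, estimated with central differences."""
    scores = []
    for i in range(len(x)):
        up = x.copy()
        up[i] += eps
        down = x.copy()
        down[i] -= eps
        scores.append(abs(f(up) - f(down)) / (2 * eps))
    return scores


print(saliency(model, [0.5, 1.0, 3.0]))
```

The first feature scores highest because its weight is largest in magnitude, and the third scores zero because the model ignores it entirely, regardless of its large input value.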

With great power, though, comes great responsibility. Neural networks can amplify biases present in their training data, leading to unfair or discriminatory outcomes. Ensuring transparency and fairness is no longer optional; it is a professional obligation.
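Some forms of bias can at least be measured. One simple check is the demographic parity difference: the gap between groups in how often a model predicts the positive outcome. It captures only one notion of fairness among several, and the predictions and group labels below are invented purely for illustration.

```python
def positive_rate(preds: list[int], groups: list[str], group: str) -> float:
    """Share of members of `group` who receive the positive prediction (1)."""
    members = [p for p, g in zip(preds, groups) if g == group]
    return sum(members) / len(members)


def demographic_parity_difference(preds: list[int], groups: list[str]) -> float:
    """Largest gap in positive-prediction rate between any two groups (0 means parity)."""
    rates = [positive_rate(preds, groups, g) for g in set(groups)]
    return max(rates) - min(rates)


# Made-up predictions for two hypothetical groups, A and B.
preds = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
print(demographic_parity_difference(preds, groups))
```

Group A receives a positive prediction 75% of the time and group B only 25%, a gap of 0.5 that would warrant a closer look at the training data and model.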

Beyond the Present: The Future of Neural Thinking

The neural networks of tomorrow are expected not just to process data but to understand context, adapt dynamically, and collaborate with humans. Innovations such as spiking neural networks, which mimic how biological neurons communicate through discrete spikes, and transformer architectures, which use self-attention to model relationships across long sequences of data, are pushing boundaries even further.
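Spiking networks replace continuous activations with discrete events in time. A common textbook model for their neurons is the leaky integrate-and-fire (LIF) neuron: its membrane potential leaks away over time, accumulates input, and fires a spike then resets when it crosses a threshold. The sketch below simulates one with simple Euler steps; the time constant, threshold, and input currents are arbitrary assumptions chosen to show that a weak input never fires while a stronger one produces a regular spike train.

```python
def simulate_lif(input_current: list[float], tau: float = 10.0, threshold: float = 1.0,
                 v_reset: float = 0.0, dt: float = 1.0) -> list[int]:
    """Leaky integrate-and-fire neuron, simulated with Euler steps.

    The potential v decays toward 0 with time constant tau, integrates the input
    current, and emits a spike (1) followed by a reset whenever v reaches threshold.
    """
    v = v_reset
    spikes = []
    for current in input_current:
        v += dt * (-v / tau + current)  # leak plus input
        if v >= threshold:
            spikes.append(1)
            v = v_reset
        else:
            spikes.append(0)
    return spikes


weak = simulate_lif([0.05] * 50)   # settles near tau * 0.05 = 0.5, below threshold
strong = simulate_lif([0.3] * 50)  # crosses threshold every few steps
print(sum(weak), sum(strong))
```

The information is carried by when and how often the neuron spikes rather than by a continuous output value, which is the key difference from the neurons in conventional deep networks.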

As neural networks become integral to decision-making, the demand for experts who understand their mechanics continues to rise. Building mastery in AI is not merely about coding—it’s about shaping systems that think responsibly, ethically, and intelligently.

Conclusion

Neural networks are the digital embodiment of human curiosity—an attempt to teach machines how to learn and reason. From early brain-inspired models to today’s deep learning architectures, they symbolise the merging of science and imagination.

Their success lies not in replacing humans but in augmenting human potential—helping us solve problems we couldn’t before. For those eager to explore this world, understanding how neural networks function is a first step toward innovation.

With commitment, practice, and expert guidance, anyone can move from curiosity to competence, transforming fascination into skill—and ultimately, vision into intelligence.